The Future of AI: GPT-5 and Beyond - Insights from OpenAI's CEO

In recent discussions about the next generation of artificial intelligence, OpenAI's CEO, Sam Altman, made some intriguing statements about GPT-5, leaving the AI community both fascinated and somewhat anxious about the implications. The interview shed light on the potential capabilities of the future model.

GPT-5 Capabilities and Benchmark Tests

One important insight from the interview concerned how predictable the capabilities of next-generation AI models are. By analyzing how previous models performed on standard evaluations, we can estimate what GPT-5 might achieve on similar ones. OpenAI has released comparative results for GPT-3.5 and GPT-4 on standard benchmark tests, which offer a basis for estimating the capabilities of the upcoming GPT-5.
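To make the idea of projecting from earlier results concrete, here is a minimal sketch. It is not OpenAI's method. It assumes that the gain between two model generations repeats in log-odds space, which keeps the projected score below 100%. The benchmark names and scores are hypothetical placeholders, not published results, and the function `extrapolate_score` is introduced here purely for illustration.

```python
import math


def logit(p: float) -> float:
    """Convert a fraction correct (0 < p < 1) into log-odds."""
    return math.log(p / (1.0 - p))


def sigmoid(x: float) -> float:
    """Convert log-odds back into a fraction between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-x))


def extrapolate_score(older: float, newer: float) -> float:
    """Project a next-generation score by repeating the last log-odds gain.

    This is a naive assumption for illustration only; real progress
    need not follow this pattern.
    """
    step = logit(newer) - logit(older)
    return sigmoid(logit(newer) + step)


# Hypothetical placeholder scores (fraction correct), NOT real benchmark data:
# (older model, newer model)
scores = {
    "benchmark_a": (0.50, 0.80),
    "benchmark_b": (0.30, 0.60),
}

for name, (older, newer) in scores.items():
    projected = extrapolate_score(older, newer)
    print(f"{name}: {older:.2f} -> {newer:.2f} -> projected {projected:.2f}")
```

A projection like this only covers skills that are already being measured. It says nothing about abilities that no existing benchmark captures.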

Unpredictable Emergent Capabilities

However, one caveat was pointed out: the unpredictability of emergent capabilities. The term refers to abilities that an AI model develops without experts being able to predict them beforehand. In a recent AI talk, examples were given of models suddenly gaining new abilities, such as performing arithmetic or answering questions in a language they previously could not handle, seemingly out of nowhere. These unpredictable emergent capabilities are both exciting and unnerving, as they present a profound challenge to our understanding of AI development.

Evolution of AI's Theory of Mind

The conversation also touched upon the evolution of AI's theory of mind, that is, its capacity to understand and predict what another being might be thinking. This ability, which is fundamental to strategic thinking, has developed markedly in AI models over recent years, with successive models gradually advancing in their level of strategic reasoning. What's surprising, however, is that this capability often becomes apparent only after the fact.

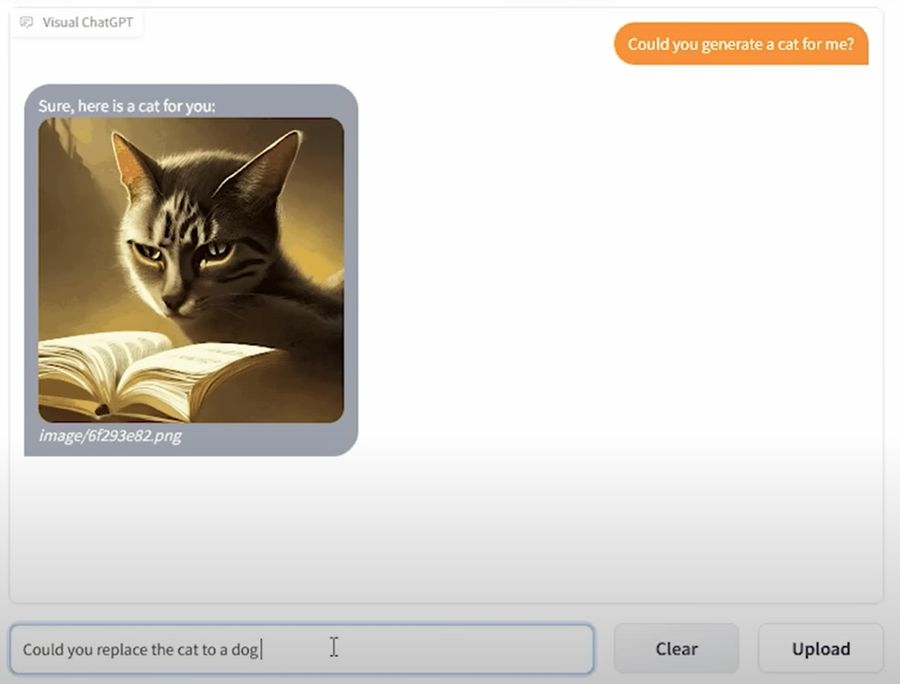

Beyond Text: Multimodalities

One crucial aspect that Sam Altman emphasized in his statements about GPT-5 was the shift towards additional modalities. While much of the focus has been on text, it's becoming increasingly evident that text is only one modality. Audio, video, and images also play a significant role in the development of AI. In fact, we've already seen evidence of this in GPT-4, which is set to include some multimodal features, and in OpenAI's DALL·E, an AI system that generates images from text descriptions.

Altman highlighted the need for these additional modalities, drawing a comparison to human communication, which relies not only on text but also on body language, music, and many other forms of expression.

The Whisper Project

A lesser-known OpenAI project called Whisper has also sparked interest. It's an AI system trained to approach human-level robustness and accuracy in English speech recognition. Given the importance of the audio modality, Whisper might be a precursor to future updates of ChatGPT or even GPT-5, hinting at the potential for real-time verbal interaction with AI models.

Future Expectations

Despite the excitement around GPT-5, it's essential to note that the model is unlikely to be released this year. Sam Altman stated that training for GPT-5 would not begin for at least another six months. In the meantime, the focus will be on fine-tuning GPT-4 and exploring its multimodal capabilities.

In conclusion, with whispers of new developments in AI growing louder, the anticipation for GPT-5 and its potential capabilities continues to build. One thing is certain: the future of AI is here, and it's filled with possibilities we can barely begin to comprehend.