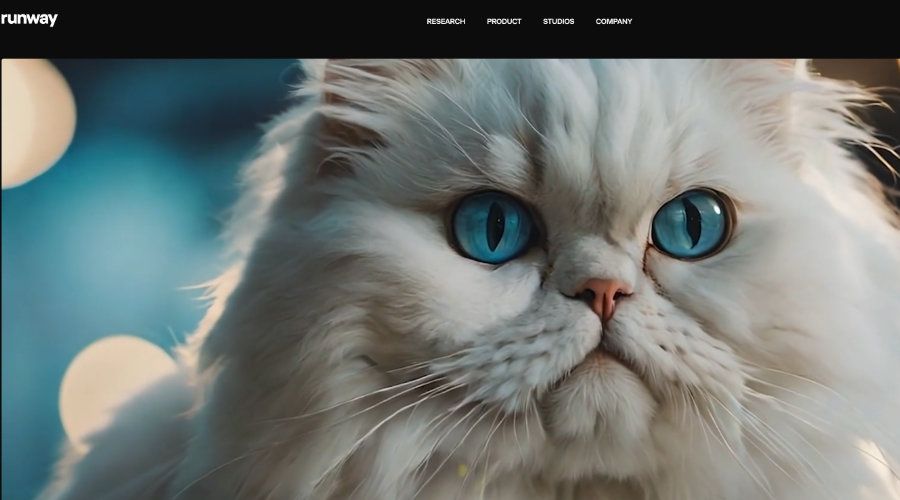

Runway ML with Gen-3 AI Video

Gen-3 Alpha is the first of the next generation of foundation models trained by Runway on a new infrastructure built for large-scale multimodal training. It is a major improvement in fidelity, consistency, and motion over Gen-2, and a step towards building General World Models.

RunwayML is a creative suite that gives content creators, artists, and developers machine learning (ML) tools for creative projects. Its models cover tasks such as video editing, image generation, text-to-image synthesis, and style transfer.

Key features of RunwayML include:

- ML Models for Creativity: It hosts a library of pre-trained models for creative tasks such as generating images, transforming styles, and enhancing video content.

- Video Editing and Generation: RunwayML provides advanced tools for video editing, such as background removal, motion tracking, and AI-powered video generation, often allowing creators to achieve these effects without extensive coding knowledge.

- User-Friendly Interface: The platform is designed with an easy-to-use interface, enabling users who are not experts in ML to apply models and create sophisticated content by dragging and dropping assets or making simple adjustments.

- API and SDK: For developers, RunwayML provides APIs and SDKs for integrating its ML models into applications, websites, and other creative software.

- Real-Time Collaboration: It allows for real-time editing and collaboration, which is helpful for teams working together on visual and multimedia projects.

RunwayML is widely used in creative industries such as film, art, and design to speed up content creation and to allow non-technical users to integrate AI into their workflows.
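
To make the API and SDK item in the feature list a little more concrete, here is a minimal Python sketch of how an application might prepare a video generation request and wait for the result. It is an illustration under stated assumptions, not Runway's documented API: the field names (`prompt`, `duration_seconds`), the status values (`running`, `succeeded`, `failed`), and both helper functions are hypothetical. It also assumes that generation runs as an asynchronous job that the client polls, which is common for hosted generation services. For real integrations, use the request shapes from Runway's developer documentation.

```python
import json
import time
from typing import Callable, Dict

# NOTE: all field names and status strings here are hypothetical placeholders
# chosen for illustration; they are not taken from Runway's API reference.


def build_generation_request(prompt: str, duration_seconds: int = 5) -> str:
    """Serialize a hypothetical text-to-video request body as JSON."""
    if not prompt.strip():
        raise ValueError("prompt must not be empty")
    if duration_seconds <= 0:
        raise ValueError("duration_seconds must be positive")
    payload = {"prompt": prompt.strip(), "duration_seconds": duration_seconds}
    return json.dumps(payload, sort_keys=True)


def wait_for_task(fetch_status: Callable[[], Dict[str, str]],
                  max_attempts: int = 10,
                  delay_seconds: float = 0.0) -> Dict[str, str]:
    """Call fetch_status repeatedly until it reports a terminal state."""
    for _ in range(max_attempts):
        status = fetch_status()
        if status.get("state") in ("succeeded", "failed"):
            return status
        time.sleep(delay_seconds)
    raise TimeoutError("task did not finish within max_attempts polls")


if __name__ == "__main__":
    print(build_generation_request("a slow pan across a foggy harbor"))

    # Stand-in for a real HTTP status call: "running" twice, then "succeeded".
    responses = iter([
        {"state": "running"},
        {"state": "running"},
        {"state": "succeeded", "output_url": "https://example.com/video.mp4"},
    ])
    print(wait_for_task(lambda: next(responses)))
```

Passing the status check in as a function keeps the polling logic independent of any particular HTTP client, so the same loop can be exercised with a stub, as above, or wired to a real SDK call later.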